Connecting via SSH Tunnel

This page walks through connecting your data sources to Secoda through a direct SSH tunnel.

Getting Started with SSH Tunnels

Tunnels require you to run an SSH server process (sshd) on a host that is accessible from the public internet. These hosts are often called jump hosts or Bastion hosts, and they can be set up with nearly all cloud providers. Their sole purpose is to provide access to resources in a private network from an external network such as the internet.
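Conceptually, this is SSH local port forwarding: an SSH client connects to the Bastion host, and sshd on the Bastion opens the onward connection to the private data source. As a hedged sketch (not a description of how Secoda implements its tunnels), the Python below builds the equivalent OpenSSH command line. The user `secoda`, host `bastion.example.com`, private address `10.0.1.25`, the ports, and the `local_forward_command` name are all hypothetical placeholders.

```python
import shlex


def local_forward_command(bastion_user, bastion_host, data_host, data_port,
                          local_port, ssh_port=22, identity_file=None):
    """Build an OpenSSH argv forwarding localhost:local_port to data_host:data_port via the bastion."""
    argv = [
        "ssh",
        "-N",                                   # run no remote command; only forward ports
        "-p", str(ssh_port),                    # port sshd listens on at the bastion
        "-L", f"{local_port}:{data_host}:{data_port}",  # local port -> target as seen from the bastion
    ]
    if identity_file:
        argv += ["-i", identity_file]           # private key used to authenticate to the bastion
    argv.append(f"{bastion_user}@{bastion_host}")
    return argv


cmd = local_forward_command("secoda", "bastion.example.com", "10.0.1.25", 5432, 15432)
print(shlex.join(cmd))
# prints: ssh -N -p 22 -L 15432:10.0.1.25:5432 secoda@bastion.example.com
```

Running such a command would let local clients connect to `localhost:15432` and reach `10.0.1.25:5432` through the Bastion, provided the Bastion's sshd permits TCP forwarding (the `AllowTcpForwarding` option, which defaults to `yes`).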

Setup

There are three main steps to set up a tunnel:

1. Configure a host in your environment that is accessible from the public internet. Make sure the Secoda IP address is whitelisted.

2. Create a Tunnel in Secoda and add the configuration details from the host (SSH Username, SSH Hostname, Port). Once you submit these details, a Public Key will be shown.

3. Add the Public Key to the authorized_keys file on your host.
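Adding the Public Key to authorized_keys can be scripted. The Python sketch below assumes it runs as the SSH user on the host and that the key from Secoda is a single standard OpenSSH public key line (`<type> <base64-data> [comment]`). It checks that the key is well formed (the decoded data must begin with the same key type, per the SSH wire format), appends it only if it is not already present, and applies the 0700/0600 permissions listed under Troubleshooting. The function names are hypothetical.

```python
import base64
import os
import struct
from pathlib import Path


def parse_public_key(line):
    """Return (key_type, blob) for an OpenSSH public key line, or raise ValueError."""
    parts = line.strip().split()
    if len(parts) < 2:
        raise ValueError("expected '<type> <base64-data> [comment]'")
    key_type, data = parts[0], parts[1]
    try:
        blob = base64.b64decode(data, validate=True)
    except ValueError as exc:  # binascii.Error is a subclass of ValueError
        raise ValueError("key data is not valid base64") from exc
    # SSH wire format: the blob starts with a uint32 length followed by the key type string.
    if len(blob) < 4:
        raise ValueError("key data is too short")
    (length,) = struct.unpack(">I", blob[:4])
    if blob[4:4 + length] != key_type.encode():
        raise ValueError("key type does not match the encoded key data")
    return key_type, blob


def install_public_key(home, public_key):
    """Append public_key to <home>/.ssh/authorized_keys unless already present.

    Returns True if the key was added, False if it was already there.
    """
    _, blob = parse_public_key(public_key)
    ssh_dir = Path(home) / ".ssh"
    ssh_dir.mkdir(mode=0o700, exist_ok=True)
    os.chmod(ssh_dir, 0o700)  # mkdir's mode is filtered by umask; enforce it explicitly
    auth_file = ssh_dir / "authorized_keys"
    existing = auth_file.read_text() if auth_file.exists() else ""

    for line in existing.splitlines():
        try:
            if parse_public_key(line)[1] == blob:
                os.chmod(auth_file, 0o600)
                return False
        except ValueError:
            continue  # skip blank lines, comments, and entries with leading options

    # If this call creates the file, it is created no more permissive than 0600.
    fd = os.open(auth_file, os.O_WRONLY | os.O_CREAT | os.O_APPEND, 0o600)
    with os.fdopen(fd, "a") as f:
        if existing and not existing.endswith("\n"):
            f.write("\n")  # don't glue the new key onto an unterminated last line
        f.write(public_key.strip() + "\n")
    os.chmod(auth_file, 0o600)
    return True
```

Calling `install_public_key(Path.home(), key_text)` twice with the same key adds it once; the second call returns `False`.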

AWS

Create an EC2 instance from the AWS Management Console in a public subnet in the same VPC as the resource that you'd like to integrate with Secoda.

Add the Public Key from Secoda to the authorized_keys file on the instance.

Create a Security Group for this instance, and add an inbound rule that allows SSH traffic (port 22 by default) from the Secoda IPs.

Make sure the EC2 instance can reach the resource that you'd like to integrate with Secoda. This might mean adding an inbound rule on the database or data source that allows traffic from the EC2 instance's IP address.
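For the Security Group inbound rule for the Secoda IPs, a minimal Python sketch can build the rule in the `IpPermissions` shape accepted by the EC2 API. `203.0.113.10` is a documentation-range placeholder, not a real Secoda IP; substitute the addresses Secoda provides. Port 22 is assumed (adjust it if your SSH server listens elsewhere), and the `build_ssh_ingress` name and description text are hypothetical.

```python
import ipaddress


def build_ssh_ingress(source_ips, port=22, description="Secoda SSH tunnel"):
    """Return an EC2 IpPermissions list allowing TCP `port` from each source IP or CIDR."""
    ipv4, ipv6 = [], []
    for ip in source_ips:
        # A bare address becomes a single-host network (/32 or /128);
        # a CIDR with host bits set raises ValueError (strict parsing).
        net = ipaddress.ip_network(ip)
        if net.version == 4:
            ipv4.append({"CidrIp": str(net), "Description": description})
        else:
            ipv6.append({"CidrIpv6": str(net), "Description": description})
    permission = {"IpProtocol": "tcp", "FromPort": port, "ToPort": port, "IpRanges": ipv4}
    if ipv6:
        permission["Ipv6Ranges"] = ipv6
    return [permission]


rules = build_ssh_ingress(["203.0.113.10"])
print(rules)
```

With boto3 installed and AWS credentials configured, the result could be passed as `boto3.client("ec2").authorize_security_group_ingress(GroupId=..., IpPermissions=rules)`, where `GroupId` is the ID of the Security Group created for the instance.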

Azure Cloud

Create a Virtual Network in the Azure portal that has access to the database or data source that you'd like to integrate with Secoda. Make sure Azure Firewall is enabled for this network.

Create a Virtual Machine to act as the jump server. This VM does not need public access, but it must have access to the database or data source that you are integrating with Secoda.

Go to your firewall rules and add a NAT rule with the following settings:

Protocol: TCP

Source IP address: the Secoda IP address

Destination IP address: the IP address of your firewall

Port: 22 by default

Translated IP address: the IP address of the Virtual Machine

From within your instance, you'll need to create a user and set up the SSH key provided by Secoda. The following commands are recommended for this:

Connecting your Integration using a Tunnel

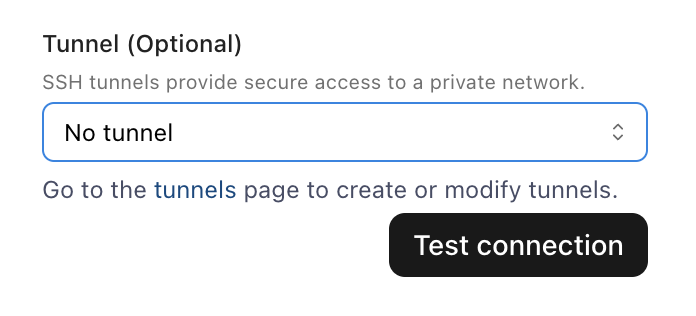

Once your tunnel has been created, navigate to the integration for the data source you're connecting. On the connect page, add the credentials for the data source.

At the bottom of the connect page, you'll see the option to add a tunnel. Click the arrow to open a dropdown menu listing your recently created tunnel. Select the tunnel and click Test Connection to complete your integration setup.

Troubleshooting

If you're having trouble establishing a connection with a standard tunnel, check the following:

Whitelist the Secoda IP: Ensure that the Secoda IP address is whitelisted on your Bastion host.

Verify the Public Key: Confirm that the public key provided during tunnel creation has been added correctly to the ~/.ssh/authorized_keys file of the user on the Bastion host.

Check Permissions on SSH Files: Ensure that the permissions on SSH-related files and directories are set correctly:

~/.ssh directory: 0700

~/.ssh/authorized_keys file: 0600
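To audit these two modes on the Bastion host, a minimal Python sketch (the `audit_ssh_permissions` name is hypothetical) reports any mismatch. It assumes you run it as the SSH user, or pass that user's home directory explicitly.

```python
import stat
from pathlib import Path

# Expected modes from the permissions check, relative to the user's home directory.
EXPECTED_MODES = {".ssh": 0o700, ".ssh/authorized_keys": 0o600}


def audit_ssh_permissions(home):
    """Return a list of problems found; an empty list means the modes match."""
    problems = []
    for rel_path, expected in EXPECTED_MODES.items():
        path = Path(home) / rel_path
        try:
            mode = stat.S_IMODE(path.stat().st_mode)  # permission bits only
        except FileNotFoundError:
            problems.append(f"{path} is missing")
            continue
        if mode != expected:
            problems.append(f"{path} has mode {mode:04o}, expected {expected:04o}")
    return problems


if __name__ == "__main__":
    issues = audit_ssh_permissions(Path.home())
    print("\n".join(issues) if issues else "SSH permissions look correct")
```

A report such as `authorized_keys has mode 0644, expected 0600` points to the same fix as the note below: `chmod 600 ~/.ssh/authorized_keys`.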

Note: The permissions for authorized_keys should be 0600 (not 0644) to maintain strict security compliance.

Concurrency: To increase the number of concurrent connections through a single SSH tunnel, refer to Improve Concurrency on your SSH Bastion.

Test Network Connectivity: Verify that the Bastion host can connect to your data source. Replace $data_source_host and $data_source_port with the actual hostname and port of your data source.

Check that you can connect to the Bastion host from your personal machine. You will need to use your own private and public keys, not the public key provided by Secoda during tunnel creation. Replace the values where appropriate.
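The two connectivity checks above can also be approximated in Python, assuming Python 3 is available on the machine running them. In this hedged sketch with hypothetical function names, `tcp_reachable` tests whether a TCP connection opens (for example, run on the Bastion host against your data source's host and port), and `ssh_banner` reads the identification line (starting with `SSH-`) that an SSH server sends when a client connects (for example, run from your personal machine against the Bastion). Neither function tests key authentication, so a real login with your own keys is still needed.

```python
import socket


def tcp_reachable(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, DNS failure, or timeout
        return False


def ssh_banner(host, port=22, timeout=5.0, max_bytes=4096):
    """Return the SSH identification line the server sends on connect, or None."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            buf = b""
            while len(buf) < max_bytes:
                chunk = sock.recv(1024)
                if not chunk:
                    break  # server closed the connection
                buf += chunk
                # Servers may send other lines first; the version line starts with "SSH-".
                for line in buf.split(b"\n")[:-1]:  # complete lines only
                    if line.startswith(b"SSH-"):
                        return line.rstrip(b"\r").decode("ascii", "replace")
    except OSError:
        return None
    return None
```

For example, `tcp_reachable("db.internal", 5432)` on the Bastion or `ssh_banner("bastion.example.com")` from your machine (both hostnames hypothetical). A `False` or `None` result points to a network or firewall problem rather than a key problem.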
